AI has moved from an experimental trend into the business mainstream. Companies are adopting artificial intelligence at scale to automate routine work. Contact centers place strict requirements on AI: instant responses without delays, emotional intelligence for dealing with dissatisfied customers, smooth handoff of complex cases to human agents, support for all communication channels, and enterprise-level reliability.

For a leader who has decided to modernize the customer service system, choosing a service becomes a real headache. The market has hundreds of AI platforms from tech giants to unknown startups, and each has its own prices, capabilities, and pitfalls.

One of the services worth considering, in our view, is ElevenLabs, a specialized platform for voice communications. Unlike many services that offer “universal solutions”, ElevenLabs chose a different strategy. Instead of trying to build a platform “for everything”, they focused on one specific task: creating a high-quality voice AI agent with minimal time spent on development and tuning.

Technical architecture of the platform

What is ElevenLabs?

ElevenLabs is a research and deployment company in the field of voice AI that approached the problem of creating voice agents from an unexpected angle. They created the ElevenLabs Agents Platform, a service for deploying fully configured conversational voice agents.

The advantage of the approach is especially noticeable when compared to the traditional method of creating a voice agent — a process that often turns into a complex and lengthy project:

- you need to choose a speech recognition system and hope that it understands your customers’ accents;

- select a language model so that it does not hallucinate;

- find a speech synthesis system and ensure that it doesn’t sound like a robot from 80s movies;

- hire a development team for six months to make all this work together.

With ElevenLabs, everything is simpler – they have created a ready-made solution.

Main components of the system

The ElevenLabs platform consists of four main components that work in synchronization with each other.

ASR (Automatic Speech Recognition): a model that understands the context of a conversation, distinguishes technical terms, and copes with background noise from an open office or the street. It recognizes accents and follows the customer both when they speak quickly and emotionally and when they hesitate over their words. The model is trained on millions of hours of real conversations and is constantly being improved.

LLM (Large Language Model): a neural network trained on vast amounts of text that understands and generates human language. Put simply, this is the “brain” of the AI assistant, which makes it possible to hold meaningful dialogues, answer questions, and solve tasks.

Unlike many platforms that force you to use their own models, ElevenLabs offers a choice: Google Gemini, OpenAI, Anthropic, or a Custom LLM. The list of supported models can be found in the documentation.

In compliance with GDPR (General Data Protection Regulation), the platform offers an “EU data residency” mode — all data is stored and processed only on EU servers. When the mode is activated, some older versions of Gemini and Claude are unavailable, but Custom LLM and OpenAI work without restrictions.

TTS (Text-to-Speech): the voice of the brand. This is where ElevenLabs truly impresses: more than 5000 voices in 31 languages. These are not robotic voices but lifelike speech with intonation, pauses, and emotional coloring. Customers often do not recognize the AI in the first minutes of a conversation, because the quality of synthesis makes the speech practically indistinguishable from a human’s.

Custom turn-taking model: makes the conversation natural. It detects a pause or the end of the user’s phrase and signals to the AI agent when it is appropriate to speak or to stop its response, keeping the dialogue smooth. This is one of the key technologies that make a conversation with a robot feel “alive”, without awkward pauses or interruptions. Unlike simple systems that wait for X seconds of silence, this model takes context and intonation into account.
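To make the contrast concrete, here is a minimal sketch of the simple "wait for X seconds of silence" rule that the turn-taking model is compared against. It is not how ElevenLabs detects the end of a turn; the function name, the 20 ms frame size, and the per-frame speech flags are assumptions chosen for illustration.

```python
from typing import Optional, Sequence


def end_of_turn_index(
    frame_is_speech: Sequence[bool],
    frame_ms: int = 20,
    silence_ms: int = 700,
) -> Optional[int]:
    """Return the frame index at which a fixed-silence rule declares the
    user's turn finished, or None if the rule never fires."""
    needed = silence_ms // frame_ms  # consecutive silent frames required
    heard_speech = False
    silent_run = 0
    for i, is_speech in enumerate(frame_is_speech):
        if is_speech:
            heard_speech = True
            silent_run = 0
        elif heard_speech:
            # Silence only counts once the user has actually said something.
            silent_run += 1
            if silent_run >= needed:
                return i
    return None


# A speaker who hesitates for 100 ms in the middle of a phrase.
frames = [True] * 10 + [False] * 5 + [True] * 10 + [False] * 40
```

With a 700 ms threshold the rule waits through the hesitation but also adds 700 ms of dead air at the end; with a 100 ms threshold it cuts the speaker off mid-phrase. That trade-off is what a context-aware model is meant to avoid.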

Speech synthesis models

ElevenLabs has four main speech synthesis models, each optimized for specific use scenarios.

Eleven v3 supports more than 70 languages with impeccable pronunciation and can convey emotional tones, from sincere sympathy to professional enthusiasm. The model supports multi-voice dialogues, allowing scenarios with multiple characters. The only limitation is 10,000 characters at a time, which is roughly 5-6 pages of text.

What is it for? For VIP support, premium services, image projects, where the quality of voice directly affects the perception of the brand.

Multilingual v2 supports 29 major world languages with stable quality and is especially good for long monologues, for example when the agent has to read out the terms of a contract or detailed instructions. It also has a limit of 10,000 characters and is optimized for stability rather than emotional expressiveness. Ideal for standard support and international operations.

Flash v2.5 has a latency of just 75 milliseconds, so the response is almost instant. It supports 32 languages and has a higher limit of 40,000 characters. As a bonus, it is 50% cheaper than other models. Suitable for high-volume calls and simple requests such as “check balance” or “order status”, wherever speed and cost matter more than emotional nuance.

Turbo v2.5 has a response latency of 250-300 ms, supports 32 languages, and has a limit of 40,000 characters. This model offers a balance between voice quality, response speed, and cost. Suitable for most contact center scenarios.
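The figures quoted for the four models can be turned into a small lookup that filters models by text length and latency budget. This is only a sketch built from the numbers in this article; the class, the function, and the choice to store the upper bound of 300 ms for Turbo v2.5 are assumptions, and no latency is recorded for the two models where the article gives none.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TtsModel:
    name: str
    languages: int
    max_chars: int
    latency_ms: Optional[int]  # None where the article gives no figure


MODELS = [
    TtsModel("Eleven v3", 70, 10_000, None),
    TtsModel("Multilingual v2", 29, 10_000, None),
    TtsModel("Flash v2.5", 32, 40_000, 75),
    TtsModel("Turbo v2.5", 32, 40_000, 300),
]


def candidates(text_len: int, max_latency_ms: Optional[int] = None) -> list[str]:
    """Names of models that fit the text length and, if given, the latency budget."""
    result = []
    for model in MODELS:
        if text_len > model.max_chars:
            continue
        if max_latency_ms is not None:
            # A model without a published latency cannot satisfy a budget.
            if model.latency_ms is None or model.latency_ms > max_latency_ms:
                continue
        result.append(model.name)
    return result
```

For example, a 25,000-character script leaves only Flash v2.5 and Turbo v2.5, and a 100 ms budget leaves only Flash v2.5.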

Although complete retraining of voice models for industry-specific terminology is not yet available, the platform offers effective tools to solve this issue.

- Pronunciation dictionaries allow you to adjust how AI pronounces complex terms, abbreviations, or brand names. For instance, if a product is called “XCloud” but customers are used to hearing “Ex-Cloud” rather than “Ik-Cloud”, you need to add this rule to the dictionary. The system will remember and use the correct pronunciation in all conversations.

- Alias tags work as a smart replacement — you tell the system to replace a technical term with its understandable analog. This is especially useful for internal product codes or services that sound different in conversation with the customer.

This approach solves most pronunciation issues without the need for expensive retraining of the entire model.
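The idea behind alias replacement can be sketched as a plain text substitution applied before the text is sent to synthesis. This is not the platform's dictionary format, only an illustration of the effect; the function name and the sample product code are hypothetical.

```python
import re


def apply_aliases(text: str, aliases: dict[str, str]) -> str:
    """Replace each listed term with its spoken alias, matching whole words only."""
    if not aliases:
        return text
    # Longest terms first, so a longer product name wins over its prefix.
    terms = sorted(aliases, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b")
    return pattern.sub(lambda m: aliases[m.group(1)], text)


aliases = {"XCloud": "Ex-Cloud", "SKU-4471": "Premium Storage"}
spoken = apply_aliases("Your XCloud plan SKU-4471 is active.", aliases)
```

Matching on word boundaries keeps the rule from firing inside longer words, which matters for short internal codes.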

In addition to the choice of synthesis model, ElevenLabs supports Multi-voice: the ability to use multiple voices for different departments or scenarios. For instance, the support department can speak with a calm male voice, the sales department with an energetic female voice, and VIP support with a light British accent. This creates the effect of a real team, even if the customer is talking to the same agent. Multi-voice can also be used to simulate the transfer of a call to a “senior specialist” or to train agents through role-playing scenarios.

Speech Recognition

Scribe v1

This is not just a transcriber but a full-fledged conversation understanding system that works with 99 languages. The detailed list can be found here.

For our Ukrainian business, it is important that the system understands the Ukrainian language, including local pronunciation features. Moreover, it handles situations where a client starts a conversation in Ukrainian and then switches to another language: the system automatically detects the language change and accurately records everything that was said. This solves a real problem for Ukrainian call centers, where agents have to work with clients in different languages.

Main functions of speech recognition:

- Word-level timestamps: records the time of every word in the conversation. Useful for analysis: you can quickly find the moment when the client asked for a manager or wanted to cancel a service;

- Speaker diarization: automatically separates the voices of different people in the conversation;

- Dynamic audio tagging: the system determines the emotional state of the speaker and notes pauses, interjections, and uncertainty in the voice.

All system components work towards one result: an AI agent that is indistinguishable from a human operator. Natural speech, understanding of context, and correctly placed pauses come together in one service, without the need to assemble the solution from separate parts.
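As an illustration of why word-level timestamps and speaker labels are useful, the sketch below searches a transcript for a phrase and returns the moments where it starts. The `Word` record is a hypothetical stand-in for whatever structure the transcription response actually uses.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float   # seconds from the start of the call
    speaker: str   # label assigned by diarization


def find_phrase(words: list[Word], phrase: str) -> list[float]:
    """Return the start time of every occurrence of `phrase` in the transcript."""
    target = phrase.lower().split()
    if not target:
        return []
    hits = []
    for i in range(len(words) - len(target) + 1):
        window = [w.text.lower().strip(".,!?") for w in words[i:i + len(target)]]
        if window == target:
            hits.append(words[i].start)
    return hits


call = [
    Word("I", 0.0, "customer"), Word("want", 0.3, "customer"),
    Word("to", 0.5, "customer"), Word("cancel", 0.7, "customer"),
    Word("my", 1.1, "customer"), Word("plan.", 1.3, "customer"),
]
```

Here `find_phrase(call, "cancel my plan")` points to 0.7 seconds into the call, which is the kind of lookup a supervisor would otherwise do by listening.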

Scribe v2 Realtime

An advanced version of the speech recognition system, optimized for instant processing of conversations. Supports the same 99 languages as Scribe v1, including Ukrainian. Unlike the basic version that transcribes speech with a slight delay, the realtime version outputs text almost simultaneously with the utterance of words — the delay is less than 300 milliseconds.

Key features:

- Streaming processing — text appears as you speak, not waiting for the end of the sentence;

- Intelligent punctuation: automatically places periods, commas, and question marks in real time;

- On-the-fly correction — the system can correct the beginning of the sentence when it hears the full context;

- Dialogue optimization — better understands conversational speech, interjections, and incomplete sentences.

ElevenLabs Scribe v2 Realtime can be implemented on both the client’s side and the server side. Detailed settings here.
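Streaming output with on-the-fly correction usually means the client has to distinguish provisional text from committed text. The sketch below shows one way to hold that state; the event kinds "partial" and "final" are assumed names for illustration, not the actual message types of the realtime API.

```python
class LiveTranscript:
    """Keeps committed sentences plus the current, still-changing fragment."""

    def __init__(self) -> None:
        self.committed: list[str] = []
        self.partial = ""

    def on_event(self, kind: str, text: str) -> None:
        if kind == "partial":
            # Overwrite rather than append: a later partial may revise earlier words.
            self.partial = text
        elif kind == "final":
            self.committed.append(text)
            self.partial = ""
        else:
            raise ValueError(f"unknown event kind: {kind}")

    def display(self) -> str:
        parts = self.committed + ([self.partial] if self.partial else [])
        return " ".join(parts)


live = LiveTranscript()
live.on_event("partial", "I want two")
live.on_event("partial", "I want to cancel")
shown_while_speaking = live.display()
live.on_event("final", "I want to cancel my order.")
```

Replacing the partial fragment on every update is what lets the corrected beginning of a sentence appear without duplicating text on screen.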

Platform capabilities

Main Functions

The ElevenLabs platform offers a set of functions that cover the entire spectrum of requests of a modern contact center. But this is not just a list of features — each function is thought out from the point of view of practical application in customer service.

- Text to Speech: over 5000 pre-set voices in 31 languages are available. Supports cloning of real people’s voices and creating custom voice profiles. Application: voiceover of greetings, responses, notifications.

- Speech to Text: automatic transcription of audio to text with an accuracy of 95-98%. All conversations are saved in text format for subsequent analysis. Capabilities: keyword search, statistical analysis of term frequency, identification of patterns in customer requests.

- Voice changer: modification of voice parameters such as tone, timbre, speech speed, and emotional coloring. Adjustable parameters allow you to adapt the voice to different departments and usage scenarios.

- Voice isolator: noise suppression that brings out the main voice. Filters background noise up to -30 dB. Works with typical interference: street noise, office background, household sounds.

- Dubbing: automatic speech translation that preserves the intonation and tempo of the original. Supports simultaneous translation into 31 languages. Translation delay: 200-500 ms.

- Sound effects: a library of audio elements for enriching dialogues. Includes musical intros, switching sounds, and waiting signals. You can also upload your own audio files.

- Voice cloning & design: creating a digital copy of a voice from 5-30 minutes of recording. The accuracy of reproduction is 85-95% by the MOS (Mean Opinion Score) metric.

- Conversational AI: integration of all components for dialogues. Supports contextual understanding, dialogue state management, handling interruptions, and returning to previous conversation topics.

Supported formats

The technical flexibility of the platform is demonstrated by a wide support of audio formats. This is important for compatibility with the existing infrastructure of the contact center.

PCM (Pulse Code Modulation) is an uncompressed audio format. The platform supports all popular sampling rates:

- 8 kHz for classic telephony;

- 16 kHz for wideband communication;

- 22.05 kHz for FM radio quality;

- 24 kHz for professional audio;

- 44.1 kHz for CD quality.

This means that no matter what equipment your call center uses — from old analog PBX to modern VoIP systems — the platform will work without problems.

μ-law (mu-law) at 8000 Hz is a classic companding (compression) algorithm for telephony, used in North America and Japan. If your contact center operates with legacy systems or must comply with the telecommunications standards of certain countries, support for μ-law is critical. It ensures compatibility with traditional telephone networks and old equipment that is still widely used in the industry.
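For readers unfamiliar with μ-law, the sketch below implements the continuous companding curve with μ = 255. It is the textbook formula, not the bit-exact segment encoding that G.711 hardware uses, and the function names are chosen here for illustration.

```python
import math

MU = 255  # value used for 8-bit telephony


def mulaw_compress(x: float) -> float:
    """Map a sample in [-1, 1] onto the mu-law curve, also in [-1, 1]."""
    x = max(-1.0, min(1.0, x))
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def mulaw_expand(y: float) -> float:
    """Inverse of mulaw_compress."""
    y = max(-1.0, min(1.0, y))
    return math.copysign(((1 + MU) ** abs(y) - 1) / MU, y)
```

The curve gives quiet samples a disproportionately large share of the output range: an input of 0.01 maps to more than 0.2. That is why 8 bits per sample are enough for intelligible speech on a telephone line.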

Integration methods

ElevenLabs understands that each contact center has its own technical infrastructure and therefore offers several ways to integrate:

- HTTP requests — a universal method through REST API. Sent a request — got a response. Simple and reliable;

- WebSocket — for communication in real-time without delays. A constant connection ensures instant data transfer in both directions. Necessary for live dialogues;

- Python SDK — a ready-made library for Python. Creating a voice agent in a few lines of code. Convenient for rapid prototyping and testing;

- Node.js libraries — libraries for JavaScript. Allow embedding agents in web applications, CRM systems, and operator interfaces.

The platform speaks the language of modern development and easily integrates into any technical infrastructure.
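As a rough illustration of the HTTP route, the sketch below only builds a text-to-speech request with the Python standard library and does not send it. The endpoint path, the `xi-api-key` header, and the `eleven_flash_v2_5` model identifier reflect our understanding of the public REST API and should be treated as assumptions to verify against the current documentation; the key and voice ID are placeholders.

```python
import json
import urllib.request

API_BASE = "https://api.elevenlabs.io/v1"  # assumed base URL


def build_tts_request(
    api_key: str,
    voice_id: str,
    text: str,
    model_id: str = "eleven_flash_v2_5",  # assumed model identifier
) -> urllib.request.Request:
    """Prepare (but do not send) a POST request that asks for synthesized speech."""
    body = json.dumps({"text": text, "model_id": model_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{API_BASE}/text-to-speech/{voice_id}",
        data=body,
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_tts_request("YOUR_API_KEY", "YOUR_VOICE_ID", "Hello, how can I help?")
```

Passing the request to `urllib.request.urlopen` would perform the call; keeping construction separate makes the request easy to inspect and test without network access.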

Practical deployment

Connecting a voice agent is more than just setting up the bot itself. The process includes reporting, monitoring, integrations, and other tools that form a complete communication system.

To start working, you need authorization in the system. On the elevenlabs.io website, there is a “Sign Up” button. The registration requires only an email and a password — no lengthy forms or credit cards. After confirming the e-mail, access to the platform is open.

An agent is created in the dashboard with the “Create Agent” button. It starts as a blank configuration that you set up for specific tasks:

- Agent name can be anything — “Support Service”, “Product Consultant”. The system automatically generates a unique ID for each bot.

- Language settings include the main interface language and additional languages between which customers can switch during a conversation.

- Welcome message determines the agent’s first phrase. If the field is left empty, the agent waits for the client to start the dialogue.

- System prompt sets the agent’s personality and the context of the conversation. This is the main instruction that defines AI behavior.

- Voice – more than 5000 voices in 31 languages with key parameter settings: Stability (delivery stability from emotional to monotone), Similarity Boost (closeness to the original), and the choice of synthesis model. You can create a pronunciation dictionary for specific terms and clone any voice from 5-30 minutes of recording.

- Dynamic variables – allow embedding runtime values into agent messages, system prompts, and tools. This makes it possible to personalize each dialogue, using data specific to the user, without creating multiple agents.

- Knowledge base – you can upload files or add website links (limited in the free version).

- Retrieval-Augmented Generation (RAG): this technology allows a voice or chat agent to draw on large knowledge bases during a dialogue. Instead of loading an entire document into the context, RAG retrieves only the fragments most relevant to the specific user query. In ElevenLabs, this process is automated: you activate it with a slider in the agent settings, provided that each document in the knowledge base is larger than 500 bytes. After RAG is activated, all added files are indexed and the knowledge base is split into small fragments (chunks), usually 100–500 tokens each. Each chunk represents a logically complete paragraph or section of text. This lets the agent find the necessary information faster and give more accurate, contextually relevant answers. However, RAG also has limitations:

- It does not determine which data is newer or more relevant;

- Cannot automatically resolve contradictions between document versions (for example, if one file states “14 days for return”, and another — “30 days”);

- Does not check logical consistency of information — the model may receive conflicting data and give a contradictory answer.

- Tools – this block gives the AI agent access to additional actions, for example ending the call on its own initiative, detecting the language, or transferring the client to another AI agent or to a phone number to reach an operator.

After clicking the “Test Agent” button, a conversation simulator opens, where you can ask typical customer questions. The agent responds using the uploaded information. The result is a working AI agent in about 15 minutes. This is only the initial stage, with further optimization, customization, and integration still ahead, but a prototype is available in just a quarter of an hour.

Secure access is an equally important aspect. Each agent has a unique identifier (Agent ID), which should be stored like a password: do not publish it or transmit it in the open.
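The RAG step described in the list above, splitting documents into chunks and retrieving only the most relevant ones, can be sketched in a few lines. Production systems rank chunks with embeddings; this illustration uses plain word overlap as a stand-in, and all names and sample texts are hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased words without punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split text into consecutive pieces of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def top_chunks(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks sharing the most words with the query."""
    q = tokens(query)
    ranked = sorted(chunks, key=lambda c: len(q & tokens(c)), reverse=True)
    return ranked[:k]


knowledge = [
    "Returns are accepted within 14 days of purchase.",
    "Delivery takes 3 to 5 business days.",
    "Returns are accepted within 30 days for members.",
]
retrieved = top_chunks("how many days for returns", knowledge)
```

Both return policies come back, the 14-day one and the 30-day one, and nothing in the retrieval step says which is current. This is the contradiction problem from the limitations above, and it is why the knowledge base has to be kept consistent by people.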

In ElevenLabs, you can enable authentication through API keys, OAuth, or JWT tokens to prevent unauthorized connections. For corporate users, access can be restricted by IP addresses, allowing connections only from trusted networks.

Where can AI agents be applied?

In practice, AI agents are already used in different industries:

- In support services, they take care of inquiries, solving standard questions without operator involvement;

- In retail, they help in selecting products and tracking orders, acting as personal consultants;

- Internal AI assistants in companies remind about meetings and find necessary documents;

- In online education, AI agents work in the format of interactive tutors, who explain topics, ask questions, and check understanding of the material.

It’s important to understand the main point — AI agents are not taking jobs away from operators, but freeing them from routine. Instead of cuts, employees get new roles:

- AI trainers — train and improve AI agents;

- Workflow designers — create scenarios without programming;

- Escalation specialists — work only with complex cases;

- Conversation analysts: analyze 100% of dialogues instead of 2%, not manually but with the help of AI agents.

AI agents are not a threat, but a tool for the evolution of contact centers. The more tasks artificial intelligence takes on, the more time operators have to solve really important issues, rather than mechanically following scripts.

Analytics and monitoring

When we hear the words “monitoring, quality control, analytics”, the image that immediately comes to mind is a supervisor who spends hours listening to operators’ conversations and making notes in scorecards.

Traditional quality control means selectively listening to 2-5% of calls, where the result depends entirely on the auditor’s assessment. It is expensive, subjective, and covers a minuscule part of real interactions. ElevenLabs turns this paradigm around by automatically assessing 100% of conversations.

This does not mean you no longer need QC. Supervisors simply stop being “listeners” and become data analysts and quality strategists.

The ElevenLabs system takes over the routine — it automatically analyzes every conversation, evaluates the achievement of goals, and records where the AI agent coped and where it did not.

General principle of operation

After the completion of a call, ElevenLabs automatically creates a transcript of the conversation, and then analyzes it according to the parameters you defined yourself.

The system does not “guess” what to look for — it follows your scheme from the “Analysis” section.

In the AI agent settings, you can define two things: the metrics for evaluating a conversation (Evaluation criteria) and the data to be extracted from it (Data extraction).

Such an evaluation system provides transparency and accuracy of analysis. It eliminates the human factor and subjective assessments. Instead of selective control, as in the classical QC, here 100% of conversations are analyzed.

Metrics and evaluation of conversations

The Analysis → Evaluation settings section is the quality management center. This is where you define the criteria by which the system evaluates the effectiveness of AI agents and the quality of customer service. These are the same evaluation sheets familiar to any supervisor, but now there is no need to listen to calls and fill in tables manually.

Basic analytics is available starting from the “Pro” tariff: binary evaluation of the result (success/failure), basic sentiment analysis, three preset criteria, and simple success statistics. Higher tariffs expand the functionality:

- Scale – evaluation on a scale of 1–10, up to 20 criteria with customizable weights, tracking CSAT/NPS, and analyzing reasons for failures;

- Business – all previously mentioned functions + A/B testing, agent comparison, and automatic recommendations;

- Enterprise – unlimited + custom ML models, integrations, and predictive success rating.

Each evaluation criterion is a clear rule, according to which the system will assess the conversation. You can set one or several conditions, depending on your business tasks.

For example:

- “Problem solved without operator involvement”: a key indicator for an AI agent. If the client was not transferred to a “live” employee and still received a solution, the conversation is considered successful;

- “Client confirmed the solution” — the system looks for phrases like “Thank you, everything is clear”, “Yes, the problem is resolved”, “Excellent, everything works”. This signals that the case is closed;

- “Conversation time less than 7 minutes” — helps to track efficiency. The threshold can be set to anything, for example, 10 or 15 minutes;

- “Client’s emotion at the end is positive”: AI determines the tone of the client’s speech (by words, context, and intonation, if audio analysis is enabled). If the conversation ends on a positive note, the score goes up.

After the conversation ends, the analysis process starts automatically. First, the system creates a transcript of the conversation, a complete text record of the dialogue. Then it divides the transcript into semantic blocks: greeting, clarification of details, search for a solution, and closing. Each of these fragments is compared against the evaluation criteria to determine whether the required conditions have been met: whether the problem is solved, whether the client is satisfied, and whether the conversation stayed within the time limit.

After the evaluation, the system not only provides the final result but also an explanation for it — a sort of justification. If the conversation received a low score, ElevenLabs shows why exactly: for instance, the agent didn’t confirm if the client was satisfied with the solution, didn’t suggest an additional assistance option, or concluded the conversation prematurely. Thus, the platform not only assigns a score but also helps understand the reason for the mistake and the point for improvement.
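The example criteria above can be pictured as named checks that each return pass or fail for a conversation. The sketch below uses simple rules in place of the language model that does the judging on the platform; the `Conversation` record, the phrase list, and the criterion names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Conversation:
    transcript: str
    duration_min: float
    transferred_to_operator: bool


CONFIRMATIONS = (
    "thank you, everything is clear",
    "the problem is resolved",
    "everything works",
)


def evaluate(conv: Conversation, max_minutes: float = 7.0) -> dict[str, bool]:
    """Check a conversation against three of the example criteria."""
    text = conv.transcript.lower()
    return {
        "solved_without_operator": not conv.transferred_to_operator,
        "client_confirmed": any(phrase in text for phrase in CONFIRMATIONS),
        "within_time_limit": conv.duration_min < max_minutes,
    }


result = evaluate(Conversation("Excellent, everything works!", 4.5, False))
```

Keeping each criterion as a separate named result is what makes the explanation step possible: a low score can be traced to the specific check that failed.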

Data extraction from conversation

The main setup is located in the section Agent → Analysis → Data extraction.

Here a schema is created (usually in JSON format), describing exactly what needs to be extracted from the conversation.

After that, each call is automatically analyzed — AI goes through the transcript and fills in these fields. If there was no relevant information in the conversation, the field remains empty.

In essence, Data extraction is the brain of the analysis. It determines what is considered “data” and what is just conversation text. With this tool, you can extract:

- customer data (name, phone, order ID);

- the reason for the request (“payment problem”, “feature request”, “complaint”);

- emotions (negative, positive, neutral);

- conversation outcome (“question resolved”, “awaiting confirmation”, “escalated”);

- additional details — product, city, reason for return, etc.
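A schema for the kinds of fields listed above might look like the sketch below, written as a Python dictionary that mirrors a JSON document. The field names and the `type`/`description` layout are assumptions for illustration, not the platform's exact format. The helper shows the documented behaviour that a field with no relevant information stays empty.

```python
import json

# Hypothetical extraction schema: one entry per field to pull from the transcript.
SCHEMA = {
    "customer_name": {"type": "string", "description": "Name the caller gives"},
    "order_id": {"type": "string", "description": "Order number mentioned in the call"},
    "topic": {"type": "string", "description": "payment problem, feature request or complaint"},
    "emotion": {"type": "string", "description": "negative, positive or neutral"},
    "outcome": {"type": "string", "description": "question resolved, awaiting confirmation or escalated"},
}


def normalise(extracted: dict) -> dict:
    """Keep only schema fields; anything the model did not find becomes None."""
    return {field: extracted.get(field) for field in SCHEMA}


record = normalise({"customer_name": "Olena", "topic": "complaint", "mood": "n/a"})
schema_as_json = json.dumps(SCHEMA, indent=2)
```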

The function automatically recognizes and saves basic information: names, contacts, dates, and brief summaries up to 100 words. Data is saved only in the internal storage with the possibility of manual export to CSV. With the choice of more advanced tariffs, extraction possibilities significantly expand:

- Scale: up to 50 customizable fields with regex patterns, extraction of complex structures (addresses, order numbers), detailed summaries, and automatic identification of key phrases. Data is delivered through webhooks in real time, and API access and auto-export to Google Sheets are available;

- Business — unlimited fields, working with complex business objects, multi-turn extraction (collecting data from several utterances), built-in validation, and OCR (Optical Character Recognition) of documents. Direct integration with CRM systems, database connectors, and cloud storage;

- Enterprise — extraction based on AI with customizable NER models, linking data between conversations, and automated personal data processing. Integration with any corporate systems, deployment on private servers, and HIPAA-compliant storage for medical data.

Thus, Data extraction in ElevenLabs is a tool that turns regular conversations into structured data that is understandable to the system and useful for the business.

What does the system do after analysis?

After AI has extracted the data, all information is saved in the section Evaluate → Conversations, where you can open a specific call and study the result in detail. Extracted information is automatically structured — the system shows the topic of the conversation, the client’s emotions, and the outcome of the interaction.

After this, Post-call scenarios are triggered: ElevenLabs can automatically create a ticket in Zendesk, Jira, or another system, update data in CRM, send a follow-up message to the client, or activate a webhook for custom automation of further processes.
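On the receiving side, a post-call webhook typically ends up in a small handler that decides whether a ticket is needed. The sketch below parses a JSON body and builds a ticket draft; the payload keys (`extracted`, `summary`, `topic`, `outcome`, `emotion`) are hypothetical, so the real payload structure should be taken from the platform documentation.

```python
import json
from typing import Optional


def ticket_from_post_call(raw_body: bytes) -> Optional[dict]:
    """Turn a post-call payload into a ticket draft, or None if no ticket is needed."""
    payload = json.loads(raw_body)
    extracted = payload.get("extracted", {})  # hypothetical key
    if extracted.get("outcome") != "escalated":
        return None
    return {
        "subject": f"Escalated call: {extracted.get('topic', 'unknown topic')}",
        "description": payload.get("summary", ""),
        "priority": "high" if extracted.get("emotion") == "negative" else "normal",
    }


body = json.dumps({
    "summary": "Customer was charged twice and asked for a refund.",
    "extracted": {"topic": "payment problem", "outcome": "escalated", "emotion": "negative"},
}).encode("utf-8")
draft = ticket_from_post_call(body)
```

The resulting dictionary can then be passed to whichever ticketing client the team already uses.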

Limitations and technical nuances

- ElevenLabs analyzes only what is set in the settings, no “magical” recognition exists;

- A single analysis pass covers up to 10,000 characters of transcript (longer conversations are cut into parts);

- Maximum fields for extraction — 20;

- Conversation length and the analysis functionality available:

  - up to 10 minutes on Starter, with basic statistics only;

  - up to 60 minutes on Pro, which includes Data extraction, conversation evaluation, webhooks, and CRM integrations;

  - unlimited on Enterprise, which adds advanced reporting and API access to the functionality of the Pro tariff;

- Analysis takes from 1 to 5 minutes after the call has ended.

Real analytical functionality appears only with the Pro tariff.

In practice, analytics in ElevenLabs is not “smart monitoring that understands everything on its own”, but a customizable system that does exactly what it is told.

If you set the right fields and clear criteria, it turns into a powerful tool for analyzing 100% of conversations. If not, it just creates transcripts without any meaning.

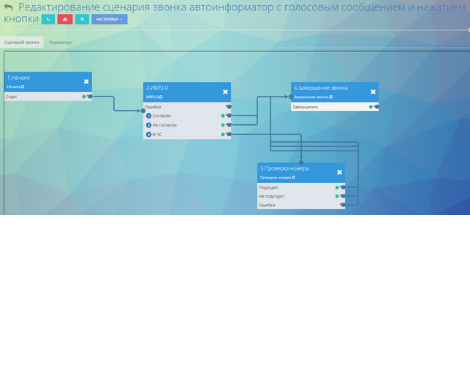

Workflow in ElevenLabs

Workflow is a visual builder integrated into the platform for creating complex AI agent scenarios. It is not a separate tool but part of the unified agent management system.

To create or modify a scenario, open the Agents Platform panel, select the required agent, and go to the Workflows tab. Here you can click Create New Workflow to create a new scenario, or select an existing one for editing.

Features of ElevenLabs editor:

- Drag-and-drop interface without code — scenarios are assembled visually, without the need for programming;

- Real-time preview — you can immediately see how the agent will execute the given actions;

- Integration with Test Agent for testing — allows you to test the scenario without real calls;

- Change monitoring — the system automatically saves changes, and if necessary, you can revert to any previous version.

Logic and structure of Workflow: Conditions and Nodes

In ElevenLabs Workflow, all work is built on the principle of “condition → action”. It’s not just a sequence of steps, but a flexible decision-making system, where the agent analyzes the context of the conversation, the state of the client, and data from external systems to choose the right action in real-time.

Conditions (transition conditions)

Conditions define when and under what circumstances the agent should move to the next step of the scenario. It’s the brain of the Workflow, analyzing the conversation context and directing the agent’s behavior. In ElevenLabs, four types of conditions are supported:

- LLM conditions — based on understanding the meaning of the client’s statement through the language model (GPT, Claude, Gemini). The agent identifies the intent, for example, “return a product” or “talk to an operator”, and triggers the appropriate branch.

- Tool results — logic that depends on the response from an external system. For example, if API returned “payment confirmed” — the agent announces the result; “error 404” — suggests help from an operator.

- System variables — internal platform variables: conversation duration, language, emotional tone of the client, time of day etc. For instance: “if the conversation lasts more than 10 minutes — transfer to the operator”.

- Custom rules — user-defined rules through the knowledge base or agent prompts. They allow adding specific scenarios like: “if a discount is mentioned and the client is irritated — offer a coupon”.

Thus, conditions are responsible for analyzing the situation and choosing the appropriate scenario.

Nodes (action nodes)

Nodes determine exactly what the agent should do when a condition is triggered. Each node is a specific action or stage of the conversation.

- Subagent node: a distinctive feature of ElevenLabs that lets you change the agent’s behavior on the fly: switch the voice, select another LLM (GPT, Claude, Gemini), or change the knowledge base, all without interrupting the dialogue.

- Tool node — responsible for interaction with external systems. Supports dynamic variables ({{customer_name}}, {{order_id}}, etc.), which are automatically inserted into requests to API or webhooks.

- Transfer node — used to transfer the call to an operator. In this case, the system automatically forms a brief summary of the conversation and sends it through a webhook to a CRM or ticket system, so the operator immediately sees the context.

- End call node — concludes the conversation and initiates post-processing: saving the transcript, evaluating quality, and analytics.
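The `{{customer_name}}` style placeholders used by the Tool node (and by dynamic variables in general) boil down to template substitution at runtime. The sketch below shows the mechanism with a regular expression. It is an illustration only, and raising an error on a missing variable is our own design choice rather than documented platform behaviour.

```python
import re

_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: dict[str, str]) -> str:
    """Replace every {{name}} in the template with its runtime value."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing dynamic variable: {name}")
        return str(variables[name])

    return _PLACEHOLDER.sub(substitute, template)


message = render(
    "Hi {{customer_name}}, order {{order_id}} has shipped.",
    {"customer_name": "Olena", "order_id": "A-1042"},
)
```

Failing loudly on a missing value avoids the agent reading a raw placeholder aloud to the customer.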

Workflow works like a decision tree: agent receives data → checks conditions → selects the corresponding node → performs action → returns to analysis.

This structure makes scenarios not linear, but intelligently adaptive — the agent reacts to the real intentions of the client, rather than simply following a predefined script.
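The “condition → action” loop can be sketched as an ordered list of rules, where the first condition that holds selects the next node. The context keys, rule names, and node names below are hypothetical and only mirror the examples given in this section; the real editor is visual and needs no code.

```python
from typing import Callable

Context = dict
Condition = Callable[[Context], bool]

# (rule name, condition, node to run) evaluated top to bottom.
RULES: list[tuple[str, Condition, str]] = [
    ("long call", lambda c: c.get("duration_min", 0) > 10, "transfer"),
    ("payment confirmed", lambda c: c.get("tool_result") == "payment confirmed", "announce_result"),
    ("wants operator", lambda c: c.get("intent") == "talk to an operator", "transfer"),
    ("goodbye", lambda c: c.get("intent") == "goodbye", "end_call"),
]


def next_node(context: Context, default: str = "continue_dialogue") -> str:
    """Return the node for the first matching rule, or the default node."""
    for _name, condition, node in RULES:
        if condition(context):
            return node
    return default
```

Because rules are checked in order, putting the duration rule first means a call longer than 10 minutes is transferred regardless of what the tool returned, so rule order is itself part of the scenario design.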

Automation of workflows is partially available with the Pro tariff — basic triggers, email notifications, and simple “if-then” conditions. However, the critically important function of Agent Transfer (transfer of calls to operators) is absent in this plan. Real capabilities open up on higher tariffs:

- Scale — transfer of calls to operators by keywords or type of request, priority queuing. Multi-step scenarios with conditional logic, scheduled actions, automatic re-calling. Full integration with Zapier, Make, API for reading/writing, Slack and Teams;

- Business: multi-agent routing with skill-based distribution, load balancing, and backup agents for service continuity. Complex branching with conditional logic, parallel processes, customizable triggers, and bulk data processing. Direct integration with Salesforce, HubSpot, and Zendesk;

- Enterprise: omnichannel routing (voice, chat, and email in a single system), AI-based routing that draws on historical data, and an event-based microservices architecture; integration with corporate systems (SAP, Oracle, and others); optional local deployment for working with critical data; customizable escalation scenarios with flexible logic; global routing rules for international operations.

The Enterprise plan is developed individually for companies with large volumes. If you process 6+ hours of conversations daily (10,000+ minutes a month), it makes sense to discuss special conditions. Enterprise clients get not only the best prices but also personal support, individual SLAs, priority in request processing, and the ability to customize functions.

Omnichannel communications

Modern customers do not want to be limited to one communication channel: they call in the morning, write in chat in the afternoon, and talk through a website widget in the evening. True omnichannel is not just the presence of different communication channels, but their seamless integration into a single system.

Telephone integrations

ElevenLabs integrates with a wide range of telephone systems, from traditional office PBXs to modern cloud platforms.

SIP Trunking — ElevenLabs is compatible with most standard SIP-trunk providers, including Twilio, Vonage, RingCentral, Sinch, Infobip, Telnyx, Exotel, Plivo, Bandwidth, and others supporting SIP protocol standards.

Technical details:

- Supported audio codecs: G.711 (8 kHz) or G.722 (16 kHz);

- TLS transport and SRTP media encryption are supported for enhanced security;

- Static IPs are available for corporate clients requiring a whitelist of IP addresses.

Twilio — native integration for handling both inbound and outbound calls.

Two types of Twilio numbers:

- Purchased Twilio Numbers (full support) – support both incoming and outgoing calls;

- Verified Caller IDs (outgoing only) – you can use existing business numbers for making AI outgoing calls.

Phone functions

Transfer to agent – supported for transfers to external telephone numbers through SIP trunking and Twilio. There are two transfer methods: Conference Transfer and SIP REFER.

Batch Calling (mass calling) – available for numbers connected through Twilio or SIP trunking.

ElevenLabs works with virtually any telephony system: if it supports SIP (as nearly all modern PBXs do), you can connect AI agents without replacing equipment.

Widget Integration – AI agent on the website

If telephony is the familiar tool of contact centers, web widgets are the present and future. ElevenLabs lets you embed an AI agent on your website. The basics are simple enough for a marketer without a technical background: a snippet of code is placed in the <body> section of the site’s main index.html file so that the widget is available on all pages. In the management panel, you can adjust the widget’s colors, size, and position to match the page design. For more advanced users, an SDK is available for full control over settings.
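
For teams that generate their pages with a script rather than editing them by hand, the placement step can be automated. The sketch below is one possible approach in Python, under stated assumptions: the function name inject_before_body_close is hypothetical, and WIDGET_SNIPPET is only a placeholder for the real embed code, which is copied from the management panel.

```python
import re

BODY_CLOSE = re.compile(r"</body\s*>", re.IGNORECASE)


def inject_before_body_close(html: str, snippet: str) -> str:
    """Return `html` with `snippet` inserted just before the last </body> tag."""
    if snippet in html:
        return html  # already embedded; keeps the operation idempotent
    matches = list(BODY_CLOSE.finditer(html))
    if not matches:
        raise ValueError("no closing </body> tag found")
    position = matches[-1].start()
    return html[:position] + snippet + "\n" + html[position:]


# Placeholder only: the real embed code is copied from the management panel.
WIDGET_SNIPPET = "<!-- ElevenLabs widget embed code goes here -->"

page = "<html>\n<body>\n<h1>Support</h1>\n</body>\n</html>"
updated = inject_before_body_close(page, WIDGET_SNIPPET)
print(updated)
```

Running the function twice on the same page leaves it unchanged, so it is safe to include in a repeated build step.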

The widget supports three modes of operation:

- Voice-only, for those who prefer to speak; handy on mobile devices where typing is inconvenient;

- Voice+text, which allows switching between modalities: start with voice, then switch to text on entering a noisy environment;

- Chat mode, suitable for “quiet” offices or late hours when speaking is not convenient.

The widget covers basic needs: self-service for customers, lead collection, and quick support without waiting for an operator. It’s no longer just a chatbot in the corner of the screen, but a fully-fledged voice assistant integrated into your website.

Choosing the optimal channel for each task increases efficiency. Telephony remains the choice for complex, emotional issues where empathy is important, while the widget is ideal for self-service scenarios in which customers want to find information themselves.

Pricing

ElevenLabs offers a transparent and predictable pricing model that scales with your business. No hidden fees, complex calculators, or unexpected bills at the end of the month.

Tariff plans

Cost optimization mechanisms

ElevenLabs recognizes that introducing a new technology requires experimentation and adjustment, and therefore offers several ways to cut costs significantly.

- Setup & Testing mode: all setup and testing operations are billed at half the cost. You can experiment with prompts, test various scenarios, and conduct load testing at half the regular price;

- Intelligent billing of pauses: a solution for real conversations. When silence in the conversation exceeds 10 seconds, the platform automatically reduces the intensity of the turn-taking and speech-to-text models, and these silent periods are billed at only 5% of the regular cost. If the client goes to fetch documents for 2 minutes, you pay for that pause as if it were 6 seconds (120 s × 5%). In real conversations, pauses account for 20-30% of the time, which gives significant savings.

- Text mode: opens up further room for optimization. Chat-only conversations have concurrency limits 25 times higher than voice calls: if your plan allows 20 concurrent voice calls, it allows up to 500 concurrent text chats. For simple requests like “check balance” or “order status”, text mode is ideal: fast, cheap, effective.
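
The arithmetic behind these three mechanisms can be checked with a short calculation. This is a rough sketch in Python, not the platform's billing logic: the function names are hypothetical, and it assumes, following the article's own 2-minute example, that a pause longer than 10 seconds is billed at 5% for its whole duration (whether the first 10 seconds are charged at the full rate is not stated in the text).

```python
SILENCE_THRESHOLD_S = 10      # silence longer than this gets the reduced rate
SILENCE_RATE = 0.05           # silent periods billed at 5% of the regular cost
TEST_DISCOUNT = 0.5           # setup and testing billed at half the cost
TEXT_CONCURRENCY_FACTOR = 25  # chat-only concurrency relative to voice


def billable_seconds(talk_s: float, pauses_s: list[float], testing: bool = False) -> float:
    """Return the call length in full-rate-equivalent seconds."""
    total = talk_s
    for pause in pauses_s:
        if pause > SILENCE_THRESHOLD_S:
            total += pause * SILENCE_RATE  # assumption: whole pause is discounted
        else:
            total += pause  # short pauses are billed like normal talk time
    if testing:
        total *= TEST_DISCOUNT
    return total


def text_chat_limit(voice_limit: int) -> int:
    """Concurrent chat-only conversations allowed for a given voice limit."""
    return voice_limit * TEXT_CONCURRENCY_FACTOR


# Three minutes of talk plus one 2-minute pause while the client fetches documents.
live = billable_seconds(180, [120])
test = billable_seconds(180, [120], testing=True)
print(f"live call: {live:.1f} s, same call in testing mode: {test:.1f} s")
print(f"text chats for 20 voice slots: {text_chat_limit(20)}")
```

Under these assumptions a 5-minute call with a 2-minute pause is billed as about 186 seconds instead of 300, and half of that in testing mode.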

Additional expenses

It’s important to understand the full picture of costs, including the additional expenses that the platform discloses up front.

- LLM costs (language model usage) are billed on a pass-through basis. The cost of the LLM tokens used is automatically added to your main ElevenLabs subscription bill, calculated at the official rates of the selected model’s provider. Depending on your needs, you can select GPT-4 from OpenAI, which adds approximately $0.01-0.03 per minute of conversation and offers a good balance of quality and cost; Claude from Anthropic, which may be more expensive but provides higher-quality answers; or Google Gemini, which often turns out to be the most economical option. The number and cost of sent and received tokens can be tracked for each conversation separately in the conversation metadata;

- Multimodal mode: the client can both speak and write within one conversation, for example starting with voice, switching to text (after entering the subway), and then returning to voice. Billing is split by modality: for voice you pay per minute of conversation, for text you pay per message.
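
To get a feel for the pass-through LLM charge, the per-minute figure quoted above can be scaled to a monthly volume, and a per-conversation cost can be derived from token counts. The sketch below is illustrative Python: the function names are hypothetical, the $0.01-0.03 range is the article's GPT-4 estimate, and the token prices in the example are placeholders rather than any provider's real rates.

```python
def llm_cost_range(minutes: float, low_per_min: float = 0.01,
                   high_per_min: float = 0.03) -> tuple[float, float]:
    """Estimate the LLM surcharge for a number of conversation minutes."""
    return minutes * low_per_min, minutes * high_per_min


def token_cost(input_tokens: int, output_tokens: int,
               input_price_per_million: float, output_price_per_million: float) -> float:
    """Cost of one conversation from its token counts and per-million-token prices."""
    return (input_tokens * input_price_per_million
            + output_tokens * output_price_per_million) / 1_000_000


# 10,000 minutes a month is the volume the article uses as a reference point.
low, high = llm_cost_range(10_000)
print(f"monthly LLM surcharge: ${low:.2f} to ${high:.2f}")

# Placeholder prices (USD per million tokens), not real provider rates.
cost = token_cost(input_tokens=4_000, output_tokens=1_000,
                  input_price_per_million=2.0, output_price_per_million=8.0)
print(f"one conversation: ${cost:.4f}")
```

The actual rates should be taken from the selected provider’s price list and compared with the token counts shown in the conversation metadata.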

ElevenLabs is a ready-made ecosystem for creating voice AI agents, capable of replacing months of development with a few weeks of setup. The platform combines quick deployment, transparent pricing, and powerful enterprise-level functionality while remaining accessible for businesses of any scale.

It does not perform miracles “right out of the box” — it requires proper configuration, a quality knowledge base, and regular optimization. But with the right setup, ElevenLabs becomes a reliable digital employee, taking over routine tasks, leaving people to handle tasks where empathy and creativity are important.

ElevenLabs demonstrates how voice AI technologies are moving from the realm of experimentation into manageable solutions. It’s a step towards a new architecture of communications, where the machine does not replace humans but becomes their assistant.